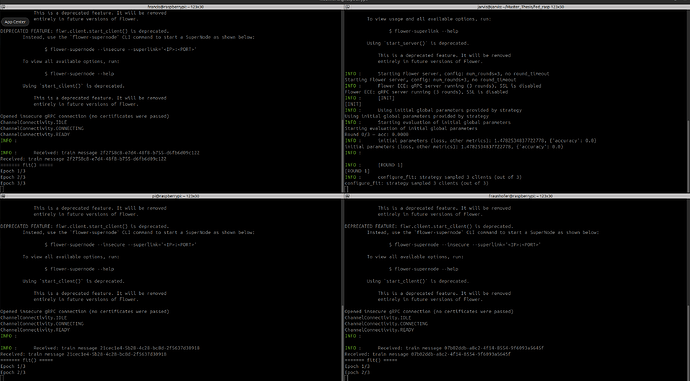

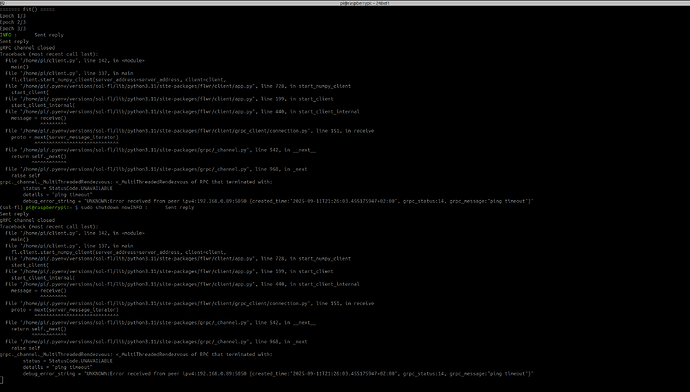

I am trying to train DistilBERT with a custom classifier head using PyTorch in a federated network with three Raspberry Pis as clients. The base model is kept frozen and only the classifier head is trained, to reduce the size and number of parameters exchanged. For some reason my evaluation function fails and the clients get pinged out of the gRPC network, causing the network to crash. I also tried without implementing the evaluation aggregation function (which evaluates the updated model on the local dataset), but the clients still get pinged out of the network. The first round of training goes fine, but the second round crashes. Please see the attached screenshots for more details. I use Flower 1.13.

Hello @tomyfrancis25, I see from the logs that you are using deprecated components of Flower (i.e. start_client(); you are likely also using start_server(), which is deprecated as well). The recommended way of using Flower is via flwr run. We have a tutorial for embedded devices that you may want to follow here: flower/examples/embedded-devices at main · adap/flower · GitHub

@tomyfrancis25, what’s different in round 2 compared to round 1? Is there more training happening in round 2, or something else making the RPi devices do more work and potentially crash?

@pan-h, do you know what could be causing the ping timeout?

In the strategy argument I specified:

    strategy = fl.server.strategy.FedAvg(
        fraction_fit=1,
        fraction_evaluate=0,
        min_fit_clients=1,
        # min_evaluate_clients=3,
        min_available_clients=1,
        initial_parameters=initial_parameters,
        # on_fit_config_fn=fit_config,
        # on_evaluate_config_fn=evaluate_config,
        # evaluate_fn=get_evaluate_fn(model),
    )
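How many clients get sampled per round depends on how `fraction_fit` and `min_fit_clients` combine with the number of clients connected at that moment. The sketch below assumes the sample size is computed as `max(int(available * fraction_fit), min_fit_clients)`, which is my understanding of FedAvg's behaviour; the function name and signature here are illustrative and not part of the Flower API.

```python
def fit_sample_size(num_available: int, fraction_fit: float, min_fit_clients: int) -> int:
    """Illustrative helper (not a Flower function): number of clients sampled for fit.

    Assumes the rule max(int(available * fraction_fit), min_fit_clients).
    """
    return max(int(num_available * fraction_fit), min_fit_clients)


# With fraction_fit=1 and min_fit_clients=1, every connected client is sampled.
one_connected = fit_sample_size(num_available=1, fraction_fit=1.0, min_fit_clients=1)
three_connected = fit_sample_size(num_available=3, fraction_fit=1.0, min_fit_clients=1)
print(one_connected, three_connected)
```

Under that assumption, a round that starts as soon as one client is connected (because `min_available_clients=1`) samples a single client, while a later round with all three devices connected samples all three.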

I specified the minimum number of fit clients as 1. In the first round only one client is sampled, but in the second round all three are sampled, and when they are sampled the gRPC network crashes. I suspect that something is wrong with the evaluation of the aggregated model on local data, which is causing this:

    def evaluate(self, parameters, config):
        print("======= evaluate() =====")
        set_weights(self.model, parameters)
        loss, accuracy = test(self.model, self.testloader, self.device)
        total_examples = len(self.testloader.dataset)
        if total_examples == 0:
            return 0.0, 0, {"accuracy": 0.0, "note": "no test data"}
        return float(loss), len(self.testloader.dataset), {"accuracy": float(accuracy)}

Hello Javier,

This is the new status: I cannot train the three Raspberry Pis simultaneously. In my case, during the first round 2 out of 3 were sampled, and those 2 were used for aggregation and evaluation. During the second round 3 were sampled but one was dropped; the other two were aggregated and evaluated. The third round, on the other hand, resulted in all three failing. A GitHub post I found states that switching to the grpc package version 1.60.0 could somehow solve the issue. Is it possible to downgrade the grpcio package in Flower? I use Flower 1.13.0, which is also an old version.

Hello @javier, do you know why this happens? I am doing my master's thesis and I am practically stuck on this.

Hi @tomyfrancis25, I think you can try to upgrade the grpcio package. As far as I understand, grpcio is backward compatible.

However, upgrading the gRPC version may not work. To resolve this issue, would you mind spending a few minutes migrating to the new format, following Upgrade to Flower 1.13 - Flower Framework? I noticed that you are still using start_client, which is not recommended. The issue you saw occurs because our deprecated start_client and start_server APIs still use a gRPC bi-directional streaming channel, which is vulnerable to network instability and triggers the gRPC error you saw. The new flower-superlink and flower-supernode approach uses a robust unary-unary gRPC channel. Plus, I would personally recommend upgrading to a newer version of Flower to get all the powerful new features.

@pan-h Thanks for the reply, I will post the results here once the upgrade is made.

I was returning accuracy as a list, which caused the local evaluation to fail, because the metrics parameter expects scalar values. It is now working fine. Thanks for the help, @javier @pan-h.
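For anyone hitting the same problem, here is a minimal sketch of coercing a metric to a scalar before returning it from `evaluate()`. The helpers `to_scalar` and `build_eval_result` are hypothetical names, not part of Flower, and averaging a list assumes it holds per-batch values, which may not match your `test()` function.

```python
from statistics import fmean
from typing import Any


def to_scalar(value: Any) -> float:
    """Hypothetical helper: collapse a metric into a single float."""
    if isinstance(value, (list, tuple)):
        if not value:
            return 0.0
        # Assumption: the list holds per-batch values, so average them.
        return float(fmean([to_scalar(v) for v in value]))
    if hasattr(value, "item"):
        # NumPy scalars and zero-dimensional tensors expose .item()
        return float(value.item())
    return float(value)


def build_eval_result(loss: Any, accuracy: Any, num_examples: int):
    """Hypothetical helper: build the (loss, num_examples, metrics) tuple with scalar values only."""
    return to_scalar(loss), int(num_examples), {"accuracy": to_scalar(accuracy)}


result = build_eval_result(loss=0.3, accuracy=[0.5, 1.0], num_examples=10)
print(result)
```

Inside the client, the last line of `evaluate()` could then become something like `return build_eval_result(loss, accuracy, len(self.testloader.dataset))`.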
