I’m running federated learning with Flower where I have more clients configured than my hardware can run concurrently, and I’m not seeing the behavior I expected.

My Setup

-

8x NVIDIA H100 GPUs

-

32 federated clients configured (

num-supernodes = 32) -

Each client allocated 0.333 GPU (

num-gpus = 0.333) -

fraction-fit = 1.0(expecting all clients to participate each round) -

Using Flower 1.19.0 with Ray backend

What I Expected

With 32 clients configured but only resources for ~20 to run simultaneously, I expected that each round would:

-

Start 20 clients on available GPUs

-

Queue the remaining 12 clients

-

As the first clients finish, the queued ones would get GPU resources and train

-

The round would complete after all 32 clients had trained

What I’m Observing

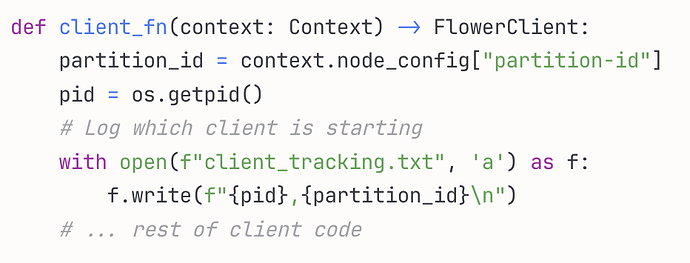

To track which clients actually run, I added logging to client_fn:

After running 10 rounds:

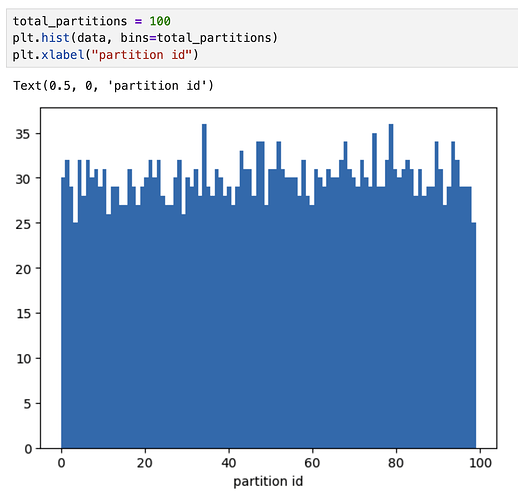

-

The tracking file shows all 32 clients (partition-id 0-31) register initially

-

GPU monitoring over the entire training period shows only 20 unique client processes ever get GPU resources

-

The same 20 partition IDs run every round

-

The other 12 partition IDs (0,1,2,5,9,12,14,21,22,25,27,28) never execute beyond their initial registration

Even after 10 rounds of training (over 75 minutes), those 12 clients never get scheduled to run. They seem to register at startup but then never actually train.

My Question

Should Flower be scheduling those 12 queued clients to run as GPU resources become available within each round? Or is there something in my configuration preventing the queueing/rotation from working? I expected all 32 clients to take turns training each round (even if it takes longer), but instead the same 20 clients train every round while 12 never run at all.

Thanks for any insights on how client scheduling works when resources are oversubscribed!